Overview

Affective Computing Group @ MIT Media Lab

Developing an LLM that turns novice coders into animation developers, using Processing code and biosensor signals. Contributions include training and refining the model, creating demonstrations of the live animations, building the project site, contributing to academic papers, analyzing data, and overseeing user testing.

System Overview

The system pairs a code-generating LLM with a live animation runtime. Users describe the effect they want in natural language; the model proposes Processing code; a thin runtime executes that code and binds its variables to incoming biosignal features so the visuals adapt in real time.

- Inputs: natural-language intent; streaming biosignal features.

- LLM stage: prompt scaffolds + guardrails to yield concise, idiomatic Processing code.

- Runtime: executes sketch; safely hot-reloads; exposes signal features to the sketch.

- Adaptation: mapping layer normalizes features and routes them to visual params.
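
The adaptation step in the list above can be sketched roughly as follows. This is a minimal, language-neutral illustration in Python, not the project's actual mapping layer: the `Route` class, the feature names (`eda_level`, `heart_rate`), the parameter names, and all numeric ranges are assumptions made up for the example.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    """Hypothetical route from one biosignal feature to one visual parameter."""
    feature: str    # incoming feature name (assumed, e.g. "eda_level")
    param: str      # sketch variable to drive (assumed, e.g. "particle_density")
    in_min: float   # expected lower bound of the raw feature
    in_max: float   # expected upper bound of the raw feature
    out_min: float  # parameter value at the lower bound
    out_max: float  # parameter value at the upper bound


def normalize(value: float, lo: float, hi: float) -> float:
    """Rescale value from [lo, hi] to [0, 1], clamping out-of-range input."""
    if hi <= lo:
        raise ValueError("hi must be greater than lo")
    return min(1.0, max(0.0, (value - lo) / (hi - lo)))


def apply_routes(features: dict[str, float], routes: list[Route]) -> dict[str, float]:
    """Return visual parameter values for the features present in this frame."""
    params: dict[str, float] = {}
    for r in routes:
        if r.feature not in features:
            continue  # skip routes whose feature has not arrived yet
        t = normalize(features[r.feature], r.in_min, r.in_max)
        params[r.param] = r.out_min + t * (r.out_max - r.out_min)
    return params


# Illustrative routes; the ranges are placeholders, not calibrated values.
ROUTES = [
    Route("eda_level", "particle_density", 0.0, 10.0, 50.0, 500.0),
    Route("heart_rate", "motion_speed", 50.0, 150.0, 0.5, 3.0),
]

params = apply_routes({"eda_level": 5.0, "heart_rate": 200.0}, ROUTES)
print(params)
```

Clamping in `normalize` keeps an out-of-range sensor reading from pushing a visual parameter past its intended bounds, which is one plausible way to keep a sketch stable when a signal spikes.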

My Contributions (by area)

- Modeling: prompt library; style hints for draw()/setup(); safe utility stubs; auto-retry on compile/runtime errors.

- Signals: feature normalization and simple smoothing so visuals don’t “flicker” with noise.

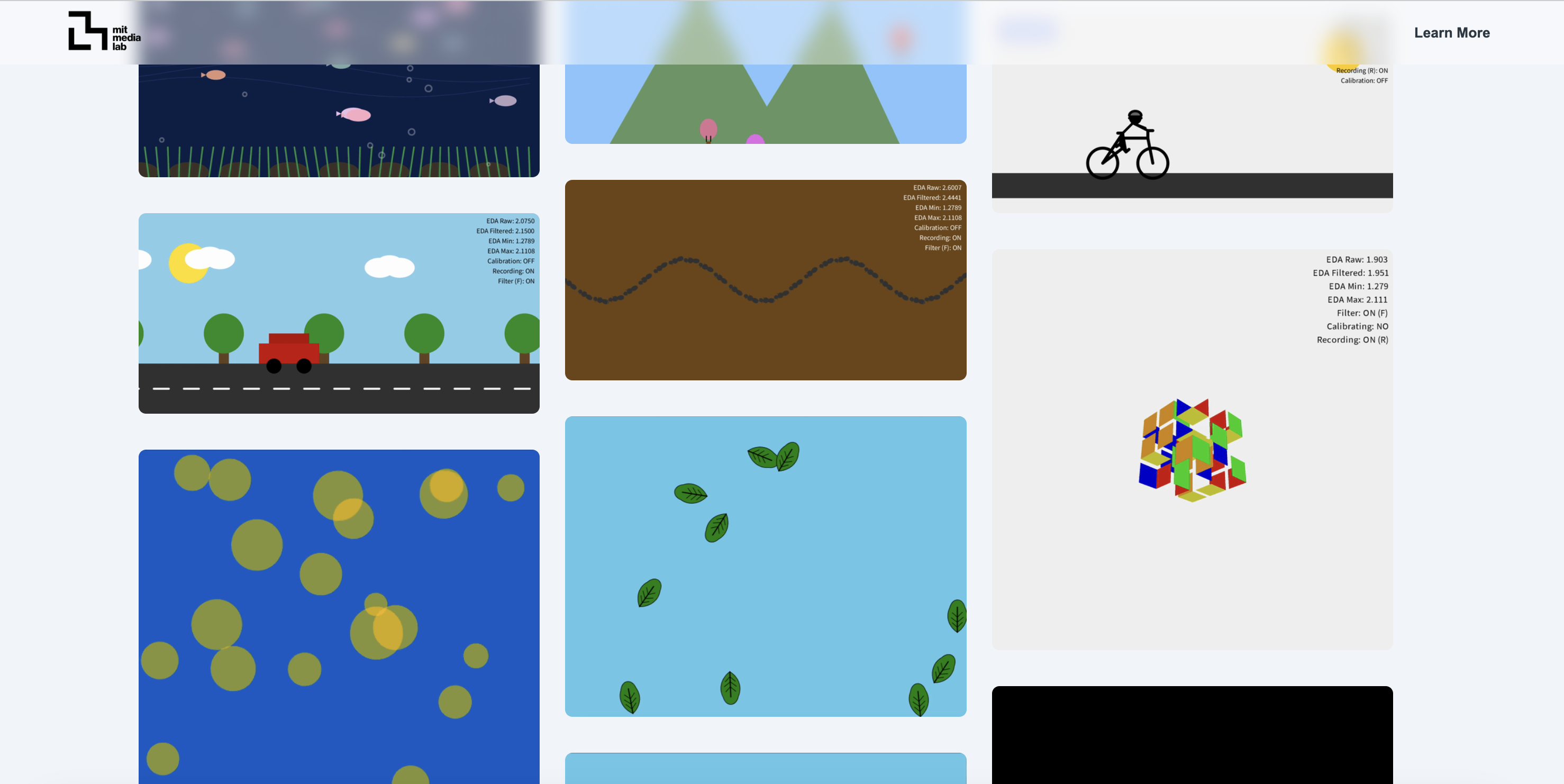

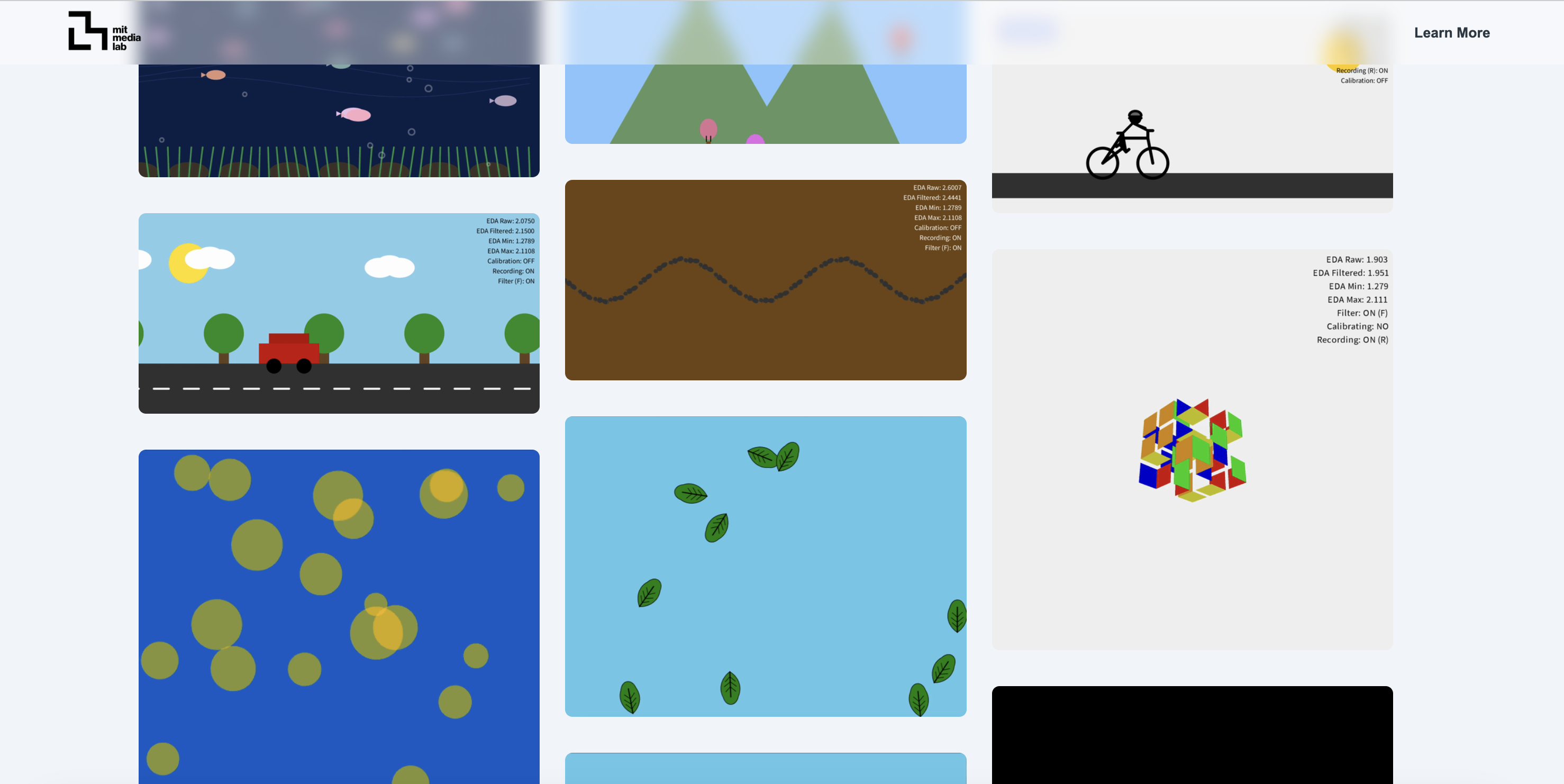

- Demos: parameterized sketches (particles, waves, fields, typography) that clearly show the effect of each feature.

- Documentation: setup guides, mapping examples, troubleshooting tips for new contributors.
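
The "simple smoothing" mentioned under Signals is not specified further. One common choice is an exponential moving average, sketched here in Python under that assumption. The class name `EmaSmoother` and the `alpha` values are hypothetical.

```python
from typing import Optional


class EmaSmoother:
    """Exponential moving average over a stream of feature values."""

    def __init__(self, alpha: float = 0.2) -> None:
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha  # higher alpha follows the signal faster, smooths less
        self.value: Optional[float] = None  # no sample seen yet

    def update(self, sample: float) -> float:
        """Fold one new sample into the running average and return it."""
        if self.value is None:
            self.value = sample  # seed with the first sample
        else:
            self.value = self.alpha * sample + (1.0 - self.alpha) * self.value
        return self.value


# A signal that alternates between 0 and 1 settles toward the middle
# instead of jumping on every frame.
smoother = EmaSmoother(alpha=0.5)
smoothed = [smoother.update(x) for x in [0.0, 1.0, 0.0, 1.0]]
print(smoothed)
```

A smaller `alpha` suppresses more noise at the cost of a slower visual response, so the value would likely need tuning per feature.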

Summer 2025 — What I Built

- Model training & refinement: crafted prompt templates for animation tasks, tuned outputs toward valid, runnable Processing, and added auto-fixes plus quick tests to catch errors.

- Biosignal → animation mapping: pipelined live sensor features into visual parameters (e.g., palette, motion speed, particle density), enabling affective, responsive sketches.

- Live demos & tooling: produced a gallery of Processing sketches that respond to signals in real time; built small utilities to preview/record runs and swap mappings quickly.

- Data analysis & user testing: set up lightweight logging, analyzed session data to find failure modes (syntax, long outputs, noisy signals), iterated prompts and safeguards; helped plan and conduct user tests.

- Docs & site: wrote contributor-facing guides and built a public-facing site to showcase interactive examples.

- Writing: contributed figures and method sections for in-progress publications.
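
The "auto-fixes plus quick tests" idea from the first bullet can be illustrated with a small retry loop. This Python sketch is an assumption about the general shape, not the project's pipeline: `quick_checks`, `generate_with_retry`, and `fake_generate` are hypothetical names, and `fake_generate` stands in for the real model call. The brace check is deliberately naive and ignores braces inside strings or comments.

```python
import re
from typing import Callable, List, Optional


def quick_checks(code: str) -> List[str]:
    """Return problems found by cheap static checks (empty list if none)."""
    problems = []
    if not re.search(r"\bvoid\s+setup\s*\(\s*\)", code):
        problems.append("missing void setup()")
    if not re.search(r"\bvoid\s+draw\s*\(\s*\)", code):
        problems.append("missing void draw()")
    depth = 0
    for ch in code:
        if ch == "{":
            depth += 1
        elif ch == "}":
            depth -= 1
        if depth < 0:
            break  # a closing brace appeared before its opener
    if depth != 0:
        problems.append("unbalanced braces")
    return problems


def generate_with_retry(
    generate: Callable[[str, List[str]], str],
    prompt: str,
    max_attempts: int = 3,
) -> Optional[str]:
    """Call generate until the output passes quick_checks, feeding back problems."""
    feedback: List[str] = []
    for _ in range(max_attempts):
        code = generate(prompt, feedback)
        feedback = quick_checks(code)
        if not feedback:
            return code
    return None  # give up after max_attempts


def fake_generate(prompt: str, feedback: List[str]) -> str:
    """Stand-in for the model: broken on the first attempt, valid after feedback."""
    if not feedback:
        return "void setup() { size(400, 400); "
    return "void setup() { size(400, 400); }\nvoid draw() { background(0); }"


result = generate_with_retry(fake_generate, "slow blue particles")
print(result)
```

In a real setup the check step would more likely invoke the Processing compiler and pass its error messages back to the model; the static checks here only show where that feedback would plug in.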

Demos & Links

User Testing — Key Findings

- Shorter prompts with explicit visual goals produce more reliable code.

- Users value a parameter “cheat sheet” (which features map to which effects) while iterating.

- Most failures clustered around abstract concepts and vague descriptions; these were addressed with guardrail templates and auto-includes.

Impact

- Reduced turnaround from idea → running sketch; increased proportion of first-try runnable generations.

- Made live signal demos stable enough for installations and user sessions.

- Shipped a publicly viewable site with examples and documentation.

What’s Next ➡️ Fall 2025

Interoception Improvement

For those with Autism Spectrum Disorder (ASD), the ability to understand, name, or recognize the sensations that signify different emotional states is often impaired. More specifically, the ability to recognize internal states such as hunger, a fast heartbeat, or the need to use the bathroom may be diminished as well. This project proposes that these abilities can be improved in those with ASD by teaching them to recognize the feeling of their internal stress response (as measured by electrodermal activity) when it is coupled with a bespoke visualization specific to their areas of comfort and interest.